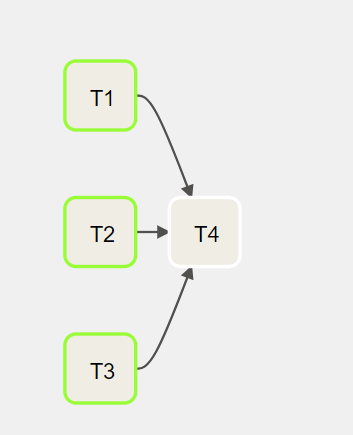

Parallel tasks DAG

Let's assume there are four tasks, T1, T2, T3, and T4, each of which takes 10 seconds to run. Tasks T1, T2, and T3 don't depend on any other task, while T4 depends on the success of T1, T2, and T3.

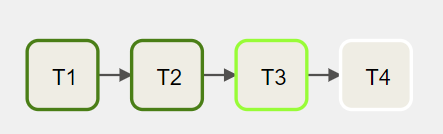

In an orchestration system that does not support parallel tasks, these tasks run sequentially, one after another, so the whole run takes around 40 seconds (4 × 10 s).

However, Airflow supports concurrency by design: tasks that don't depend on one another can run in parallel, provided the configured executor allows it (the SequentialExecutor, for example, runs only one task at a time).

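The DAG above can be updated so that T1, T2, and T3 run in parallel and T4 runs once all three have succeeded. It should then take about 20 seconds to finish: 10 seconds for the parallel group plus 10 seconds for T4.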
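
The 40-second and 20-second figures can be checked with a small calculation. The sketch below is plain Python, not Airflow code: the `DURATION` and `UPSTREAM` dictionaries and both helper functions are hypothetical names introduced only for illustration, and the parallel estimate assumes enough worker slots to run every ready task at once and ignores scheduler overhead.

```python
from functools import lru_cache

# Hypothetical model of the DAG: durations in seconds and upstream dependencies.
DURATION = {"T1": 10, "T2": 10, "T3": 10, "T4": 10}
UPSTREAM = {"T1": [], "T2": [], "T3": [], "T4": ["T1", "T2", "T3"]}


def sequential_runtime(duration):
    """Total time when tasks run strictly one after another."""
    return sum(duration.values())


def parallel_runtime(duration, upstream):
    """Time with unlimited parallelism: the longest dependency chain."""

    @lru_cache(maxsize=None)
    def finish_time(task):
        # A task starts once its slowest upstream task has finished.
        start = max((finish_time(u) for u in upstream[task]), default=0)
        return start + duration[task]

    return max(finish_time(task) for task in duration)


print(sequential_runtime(DURATION))          # 40
print(parallel_runtime(DURATION, UPSTREAM))  # 20
```

In a real deployment the observed time will be somewhat higher, since the scheduler needs time to queue and start each task.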

Create a file named 3_parallel_tasks_dag.py that contains the following code:

    from datetime import datetime, timedelta

    from airflow import DAG
    # Legacy import path; in Airflow 2 the same operator lives in airflow.operators.bash.
    from airflow.operators.bash_operator import BashOperator

    # Defaults applied to every task in the DAG.
    default_args = {
        "owner": "airflow",
        "depends_on_past": False,
        "retries": 1,
        "retry_delay": timedelta(minutes=5),
        "email": ["airflow@example.com"],
        "email_on_failure": False,
        "email_on_retry": False,
    }

    with DAG(
        "3_parallel_tasks_dag",
        default_args=default_args,
        description="parallel tasks Dag",
        schedule_interval="0 12 * * *",  # every day at 12:00
        start_date=datetime(2021, 12, 1),
        catchup=False,
        tags=["custom"],
    ) as dag:
        t1 = BashOperator(
            task_id="T1",
            bash_command="echo T1",
        )

        t2 = BashOperator(
            task_id="T2",
            bash_command="echo T2",
        )

        t3 = BashOperator(
            task_id="T3",
            bash_command="echo T3",
        )

        t4 = BashOperator(
            task_id="T4",
            bash_command="echo T4",
        )

        # Sequential alternative: t1 >> t2 >> t3 >> t4
        # Fan-in: T1, T2, and T3 are independent of each other; T4 waits for all three.
        [t1, t2, t3] >> t4
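
To see why the list form allows parallelism while the chained form does not, it can help to group the tasks into "waves" of work that are ready at the same time. The following is a minimal sketch using Python's standard `graphlib` module (Python 3.9+), not Airflow's scheduler. The `execution_waves` helper and the two dictionaries are hypothetical names used only for illustration; each dictionary maps a task to the tasks it waits for.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def execution_waves(upstream):
    """Group tasks into waves; tasks in one wave have no unmet dependencies."""
    sorter = TopologicalSorter(upstream)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything runnable right now
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished to unlock downstream tasks
    return waves


# Dependencies equivalent to: [t1, t2, t3] >> t4
fan_in = {"T1": [], "T2": [], "T3": [], "T4": ["T1", "T2", "T3"]}
# Dependencies equivalent to: t1 >> t2 >> t3 >> t4
chain = {"T1": [], "T2": ["T1"], "T3": ["T2"], "T4": ["T3"]}

print(execution_waves(fan_in))  # [['T1', 'T2', 'T3'], ['T4']]
print(execution_waves(chain))   # [['T1'], ['T2'], ['T3'], ['T4']]
```

The fan-in layout needs two waves and the chain needs four, which matches the estimates of roughly 20 and 40 seconds when each task takes 10 seconds.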